by Blair Crossman

Introduction

This blog post is for the ambitious network administrator who has been promoted to head of information security and wants to demonstrably improve the organization's security in the first 90 days or so. I will talk about how to leverage your existing network administration knowledge right now in your security role without needing a bunch of new certifications or training.

Please join me as I talk about what I refer to as Information Security BASICS, the most impactful, efficient steps you can take to improve your organization's security posture:

- Backups

- Apply patches

- Segment networks

- Isolate legacy technologies

- Configure and harden services

- Scan your assets

Backups

Disaster recovery is important, and a valid backup is essential to effective recovery. Computers and other network devices break for a variety of reasons, from power outages to water damage to simple malfunctions, so having a recent backup of important data is essential.

Many organizations rely on backup utilities that create what are known as hot backups, so called because databases are active and online during the backup. Data may be copied to multiple online backup devices under the assumption that not all backup devices will malfunction at the same time - a good assumption for physical disasters, but a poor one when dealing with malware, ransomware, and other threats that can spread to the backups. Hot backups have their limits. Have you heard of an organization that suffered a ransomware attack but was unable to restore regular operation because they had only hot backups which, because they were online, were also encrypted?

To recover from such attacks against your infrastructure, you need cold backups which can't be encrypted or impacted by malware because they are offline. Cold backups are frequent snapshots of critical data and files written to tape or some other type of resilient media. These backups are then stored in a separate physical location or multiple physical locations, such as a contracted secure tape vault service. A truck will drive up to your business with a locked case like a safety deposit box where you stash your backup media. The service will ensure that your tapes are safe from physical risks like flooding and fire.

I suggest keeping multiple sets of media and rotating them in and out of the vendor storage. The limitation of restoring from cold backups is that you will lose any data created after the most recent backup, so it is important to have several staggered copies of your data. How many copies you keep, and how far into the past you wish to go, depends on your organizational needs, but a good rule of thumb is that you should have multiple recent backups. For example, you may keep a backup from yesterday, last week, and one, two, and three months ago. Restoring from one of those backups would cost you one day, one week, or up to three months of data, respectively.
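A staggered schedule like this can be sketched in a few lines of Python. The following is only an illustration, not a feature of any backup product: the function name is hypothetical, months are approximated as 30 days, and the rule "keep the newest backup that is at least N days old" is one assumption among several reasonable ones.

```python
from datetime import date, timedelta

# Target ages in days, following the example above: yesterday, last week,
# and roughly one, two, and three months ago (months approximated as 30 days).
TARGET_AGES_DAYS = [1, 7, 30, 60, 90]


def select_backups_to_keep(available, today, target_ages=TARGET_AGES_DAYS):
    """For each target age, keep the newest backup that is at least that old.

    `available` is an iterable of datetime.date objects, one per backup set.
    Returns the dates to retain, newest first.
    """
    available = list(available)
    keep = set()
    for age in target_ages:
        cutoff = today - timedelta(days=age)
        candidates = [d for d in available if d <= cutoff]
        if candidates:
            keep.add(max(candidates))
    return sorted(keep, reverse=True)


if __name__ == "__main__":
    today = date(2024, 6, 30)
    # Hypothetical history: one backup per day for the last 120 days.
    history = [today - timedelta(days=n) for n in range(1, 121)]
    for kept in select_backups_to_keep(history, today):
        print(kept.isoformat(), f"({(today - kept).days} days old)")
```

Any backup set not returned by the function is a candidate for reuse in the rotation. Adjust the target ages to match how much data loss your organization can tolerate.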

Now, all the backup solutions in the world may not save your critical data if you do not practice restoring your critical services from backups and confirm the integrity of restored files. A simple drill for this is to attempt to create a replica of each critical service with only your backups. Then use DNS to redirect traffic to the new replica. If there is no impact to the business, good news: you have a successful backup. It is rare to get this right on the first try. Usually, you’ll find yourself having to go through several iterations of this process to fine-tune your restoration protocol.
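One way to confirm the integrity of restored files during a drill is to compare checksums. The sketch below assumes you record a manifest of SHA-256 digests at backup time and later compare it against the restored directory tree. The function names are hypothetical, and files that legitimately changed after the backup was taken would need to be excluded from the comparison.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_restore(source_manifest, restored_root):
    """Return (missing, mismatched) relative paths for a restored tree."""
    restored = build_manifest(restored_root)
    missing = sorted(set(source_manifest) - set(restored))
    mismatched = sorted(
        name
        for name, digest in source_manifest.items()
        if name in restored and restored[name] != digest
    )
    return missing, mismatched
```

An empty result for both lists suggests the restore reproduced the files recorded in the manifest. It does not prove the service itself works, which is why redirecting real traffic to the replica is still the better test.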

Remember the CPRs of backups: copy, protect, and restore. Have a plan and schedule to create copies (backups) of your critical data. Protect at least one backup by keeping it offline. Restore your critical services from backups until you get it right. Just like in elementary school when you did fire drills, you need to practice restoring your critical systems to prepare for an event you hope will never happen.

Apply Patches

I recommend applying all known vendor patches, especially critical patches, to your software as soon as you have tested them. Software updates are a race between you and your future attackers; you have to patch before the attackers have a chance to exploit a weakness, so be diligent in applying patches. Most network attacks are against known vulnerabilities, and few attacks are against new zero-day vulnerabilities.

Vendors issue critical security patches when a programming flaw is under active exploitation, or such exploitation is expected shortly after the patch is issued. Malware writers look at what a patch does, and then reverse engineer malicious payloads that will exploit any unpatched systems. This causes a race between attackers and enterprises. The trick here is to build a critical patch management process that allows you to win this race.

Sometimes critical security vulnerabilities are released during important customer windows which may create delays. No one wants to make changes that could result in downtime during monthly reconciliation or during the highest-revenue month of the year. Everyone is doing their best at such a time, and hearing vendors tell you to simply trust their patch is generally useless advice.

As with backups, the key to effective patching is testing to avoid the risk of a negative impact. You need to establish a path to expedite critical patches. Create working copies of your critical systems, patch them, and test the patched system by pointing traffic to it. If the patch behavior causes business impact, switch back to the unpatched copy, do some research, and iterate on the patch process until the patched copy works for your environment. In the case of software that has license limits that prevent you from creating a patched copy of a critical system, you need to consider your vendor's track record on patch implementation.

When concerns about potential downtime make a critical security patch hard to implement, it is important to search for steps to reduce risk until you can patch. Most critical security issues have a write-up about how to work around the issue until patching can be performed, because vendors recognize their customers' need for stability and want to help. For instance, you might need a different configuration to avoid vulnerable code, or you might need to turn off certain features. The only definitely wrong move is to make no changes while you wait for a patching window; that is how organizations are compromised by network attacks.

Segment Networks

Like how a home will have rooms with lockable doors, a network needs to be partitioned to protect privacy, with minimal holes in the walls to provide needed functionality, for instance for administration. Network segmentation has many advantages. It limits what attackers are able to do when they compromise a machine, and if done correctly can make some avenues of exploitation difficult.

Unlike patching, which is reactive, segmentation is a proactive step that can be taken to protect your network. Segmentation is the first step in setting up more advanced network monitoring. Poor segmentation will render an intrusion prevention system and other network security devices useless.

Going back to the network as a house example, you want to create separate subnets as rooms which encapsulate a type of computer user. A good starting point is to separate your subnets by use case.

For instance, subnets for workstations could be thought of as bedrooms. Network administration machines are like central air: They can push network traffic into bedrooms, but rooms will not be pushing air back into the air ducts. Controls should be set up that prevent subnets from initiating outbound connections to the administration network.

File shares and other important business utilities should be treated like a kitchen. People who live in your network/house should have access, but random strangers and guests who just want to snoop through the drawers should not.

You also have a living room, which is the most permissive part of your network. Guest Wi-Fi goes here if you have such a convenience. Guest Wi-Fi should be segmented from all the management, business, and employee networks. You should implement firewall rules that enforce the behavior you would expect from a guest in your living room. Do not allow users on the guest Wi-Fi access to business data, file shares, or management networks. Think of it as restricting a guest's access to private spaces.

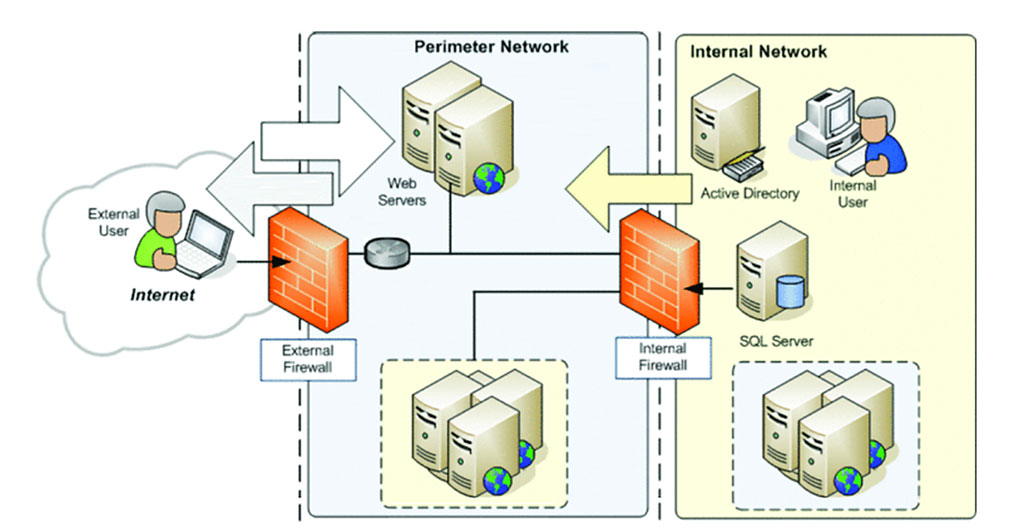

Finally, let's have a brief conversation on how to deploy web applications and other services locally or in the cloud. Local deployment is not as common as it once was, thanks to the rise of cloud services. Some of my segmentation suggestions above also apply to cloud deployments and security groups. What you want with a web deployment is a set of one-way connections. Clients should talk with web servers. Web servers should only talk with databases or other needed back-end data stores. This idea is frequently referred to as a DMZ (which stands for demilitarized zone) because hostile traffic is probably going to be sent to your web servers from the outside world. The main trick here is to only allow your web/DNS/mail servers to make outbound connections to needed internal services and only allow your back-end servers to have outbound connections to whichever hosts supply them with data. Block all other outbound network traffic from these servers and then only allow related and established connections. Most importantly, your databases should not be able to make new outbound connections to the web server, employee networks, or the internet at large. Work to implement the minimum number of inbound firewall rules needed to keep everything running.
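Before touching a firewall, it can help to write the intended one-way connections down as data and check observed traffic against them. The sketch below is a minimal default-deny model in Python. The zone names and the specific list of allowed flows are assumptions made for illustration, and it only models who may start a new connection; return traffic on established connections is left to a stateful firewall.

```python
# Each pair is (source zone, destination zone) for NEW connections.
# Zone names are hypothetical labels for the segments described above.
ALLOWED_FLOWS = {
    ("internet", "web"),            # clients talk with web servers
    ("web", "database"),            # web servers talk with their data store
    ("admin", "web"),               # administration pushes in, never the reverse
    ("admin", "database"),
    ("admin", "workstations"),
    ("workstations", "fileshare"),  # residents may use the kitchen
    ("workstations", "internet"),   # assumption: employees may browse out
    ("guest", "internet"),          # guests stay in the living room
}


def is_allowed(src_zone, dst_zone):
    """Default deny: a new connection is permitted only if explicitly listed."""
    return (src_zone, dst_zone) in ALLOWED_FLOWS


def violations(observed_flows):
    """Return the observed (src, dst) pairs that the policy does not permit."""
    return [flow for flow in observed_flows if not is_allowed(*flow)]


if __name__ == "__main__":
    observed = [("web", "database"), ("database", "internet"), ("guest", "fileshare")]
    for src, dst in violations(observed):
        print(f"unexpected new connection: {src} -> {dst}")
```

Keeping the policy as a short, explicit list makes it easier to review with colleagues and to translate into firewall rules or cloud security groups later.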

Isolate Legacy Systems

Organizations should not have old, unsupported machines on their networks. It happens anyway, for a variety of reasons. A network administrator who is forced into this situation must take prudent precautions to protect the rest of the organization. When stuck with a legacy device, it is important to remove it from any centrally managed system. In an Active Directory environment for example, you must unjoin these machines from the domain and use unique credentials to access and administer them. Legacy assets need to be locked into their own very limited subnets. Firewall rules should be used to prevent almost all access to these machines. Remove access to any file shares or other shared resources on legacy machines and discourage or deny new access.

The idea here is to maroon legacy devices on a lonely desert island and work to remove them as soon as possible. You may be able to expedite the upgrade process if the increased cost of isolation (for instance, through slowdowns) is comparable to the cost of an upgrade. It can be quite tricky to find the correct balance, but try to err on the side of security with legacy devices. Emphasize to your managers that the system cannot support new use cases due to its older, unstable nature and the need to isolate it.

Configuration

From a security standpoint, configuration is all about hardening. This is the process of initially configuring the services in your network to use the minimum number of features to get the job done. Frequently this can be a frustrating iterative process. I’ll be honest: When considering all of the BASICS, this will probably be where you spend most of your time. Correctly configuring a service can be as much about knowing your workloads as following a configuration guide. The trick here is to find good configuration guides.

If you are a Windows shop, Microsoft has wonderful hardening guidance for various services. If you are a Linux administrator, there is also a lot of documentation, though you’ll have more to sift through to find things that match your use cases. When you are just starting out, I also suggest the Center for Internet Security (CIS) benchmarks (https://www.cisecurity.org/cis-benchmarks/). The benchmarks are straightforward, comprehensive guides to hardening your services. I will throw out one caution: A CIS benchmark provides only one of many secure configurations for any given service. You may need to change things up as you learn about the services you are administering and the people who use them.

Scanning

This section is intended for those who have completed the first five (BASIC) steps. When you’ve made your best stab at all of those, it’s time to think about running a commercial vulnerability scanner. Although you may believe you have already patched the vulnerabilities in your network, these products use benign versions of well-known attack payloads to test your network for commonly exploited vulnerabilities. These products are not without their limitations, but they can help find vulnerabilities you were unaware of and prioritize your patching efforts. My personal suggestion is Nessus, owned by Tenable, but other good network scanners in this space include Qualys and Nexpose.

As you get started, don’t focus too much on the complicated vulnerability management features of these products. Instead, focus on these simple rules:

- If a vulnerability is red, make it dead. In other words, patch critical and high severity vulnerabilities first.

- If it is TLS related and on your internal network, do it last. This includes vulnerabilities like self-signed certificates, deprecated cryptographic algorithms, TLS 1.0 or TLS 1.1 in use, and others.
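The two rules above amount to a sort order, which can be sketched in Python as follows. The finding fields (`severity`, `tls_related`, `internal`) are hypothetical and do not correspond to the export format of any particular scanner; you would map your scanner's output onto them yourself.

```python
# Lower rank sorts first. Severity labels are an assumption; adjust to your scanner.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3, "info": 4}


def triage_key(finding):
    """Sort key: internal TLS findings go last; otherwise most severe first."""
    internal_tls = finding["tls_related"] and finding["internal"]
    return (internal_tls, SEVERITY_RANK[finding["severity"]])


def prioritize(findings):
    """Return findings in the order they should be worked."""
    return sorted(findings, key=triage_key)


if __name__ == "__main__":
    findings = [
        {"name": "Self-signed certificate", "severity": "medium",
         "tls_related": True, "internal": True},
        {"name": "Outdated SMB service", "severity": "high",
         "tls_related": False, "internal": True},
        {"name": "Remote code execution", "severity": "critical",
         "tls_related": False, "internal": True},
    ]
    for finding in prioritize(findings):
        print(finding["severity"], "-", finding["name"])
```

Because Python's sort is stable, findings with the same key keep the order in which the scanner reported them.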

Conclusion

I hope the six steps of the Information Security BASICS have provided some clarity about your organization's security posture and its top security needs. The BASICS provide you with impactful, efficient steps that leverage your experience as a Network Administrator so you can gain traction during your first 90 days in your Security role, earning a reputation as a Security leader who knows how to get things done with your direct reports as well as your Executive Team.

Having established a security foundation with the BASICS, you can consider how to implement other industry best practices which may require significant collaboration with stakeholders across the organization such as Data Classification and Asset Management.

Thank you for your time, and I hope that you found value in the information security BASICS. 😊

About the Author

A Senior Security Engineer at Anvil Secure, Blair has a fairly diverse IT and computer science background. His past career adventures include helping scientists manage supercomputers at Los Alamos National Laboratory, writing software for Intel, and Security Analyst work at RiskSense. Blair holds a Bachelor’s Degree in Computer Science from New Mexico Institute of Mining and Technology. His research interests include network security, web application security, and woodworking.